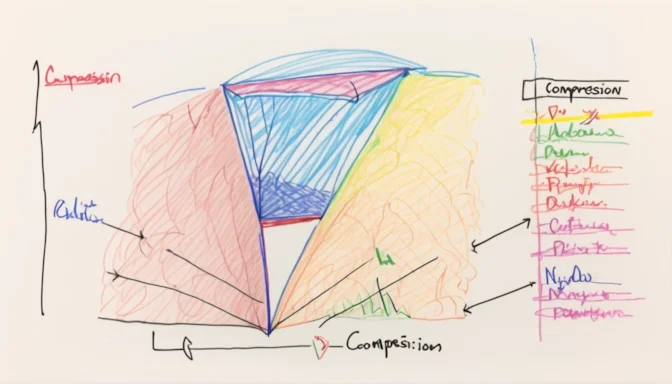

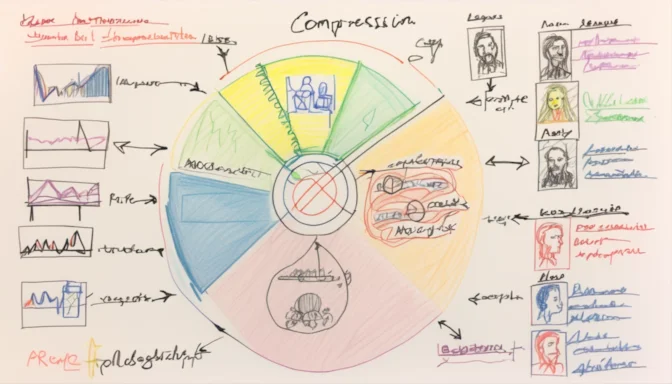

What is a Compression Algorithm?

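A compression algorithm is a technique for reducing the size of a file while preserving its essential information. It works by removing redundancy. As a result, the compressed output carries more information (entropy) per byte than the input and looks more random. The same content is packed into fewer bytes, which is what improves storage efficiency.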
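The entropy point can be made concrete with a short Python sketch. It uses the standard-library `zlib` module, although any lossless compressor would do. The helper `bits_per_byte` is a name introduced here for illustration. It measures the empirical Shannon entropy of the byte values, which can never exceed 8 bits per byte.

```python
import math
import zlib
from collections import Counter


def bits_per_byte(data: bytes) -> float:
    """Empirical Shannon entropy of the byte distribution, in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    counts = Counter(data)
    n = len(data)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


original = b"abc" * 1000              # highly redundant input
compressed = zlib.compress(original)  # lossless DEFLATE stream

print(len(original), round(bits_per_byte(original), 3))      # 3000 1.585
print(len(compressed), round(bits_per_byte(compressed), 3))  # far fewer bytes, more bits per byte
assert zlib.decompress(compressed) == original               # nothing was lost
```

The redundant input uses only three distinct byte values. The much shorter compressed output spreads its bytes over many more values. This byte-level measure ignores the order of the bytes, so treat it only as a rough proxy for information content.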

Main Types of Compression Algorithms

Compression algorithms fall into two main categories: lossless and lossy. Lossless compression guarantees that the original data can be reconstructed exactly, bit for bit. Lossy compression permanently discards some information in exchange for higher compression ratios.
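The following sketch contrasts the two categories. The lossless half uses the standard-library `zlib` round trip. The lossy half is a toy uniform quantizer standing in for real lossy codecs. The functions `quantize` and `dequantize`, the step size, and the sample values are all hypothetical and chosen only for illustration.

```python
import zlib

samples = [0, 3, 7, 12, 18, 25, 33, 42, 52, 63]  # made-up 8-bit readings

# Lossless: decompression returns exactly the bytes that went in.
raw = bytes(samples)
assert zlib.decompress(zlib.compress(raw)) == raw


# Lossy (toy example): keep only the nearest multiple of `step`.
# The remainders are thrown away and cannot be recovered later.
def quantize(values, step):
    return [round(v / step) for v in values]


def dequantize(indices, step):
    return [i * step for i in indices]


step = 8
restored = dequantize(quantize(samples, step), step)
max_error = max(abs(a - b) for a, b in zip(samples, restored))
print(restored)   # [0, 0, 8, 16, 16, 24, 32, 40, 48, 64]
print(max_error)  # 4, i.e. at most step / 2
```

The quantized values take on fewer distinct levels, which makes them easier to compress. The price is a bounded reconstruction error.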

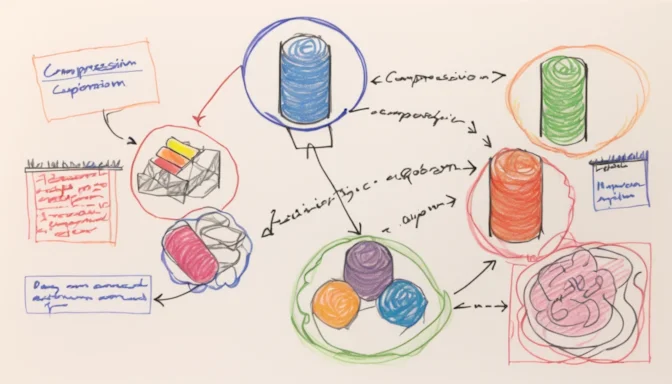

Best Compression Algorithms for Different Use Cases

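The Lempel-Ziv-Markov chain algorithm (LZMA) excels at achieving high compression ratios, particularly on large files, although it compresses more slowly than lighter-weight algorithms. For lossy image compression, transform coding based on the discrete cosine transform (DCT) is the dominant approach. JPEG uses it directly, and the HEVC codec commonly used inside HEIF files uses an integer form of it.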
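DCT-based coding works well on images because of energy compaction. For smooth image content, the transform concentrates almost all of the signal's energy in a few low-frequency coefficients. The high-frequency coefficients can then be quantized coarsely at little visible cost. The sketch below is a simplified, 1-D illustration computed directly from the orthonormal DCT-II definition. JPEG actually applies a 2-D DCT to 8×8 pixel blocks, and the pixel row here is made up.

```python
import math


def dct_ii(x):
    """Orthonormal 1-D DCT-II, computed directly from the definition (O(N^2))."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[i] * math.cos(math.pi / n * (i + 0.5) * k) for i in range(n))
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        out.append(scale * s)
    return out


# A smooth row of pixel intensities, like a gentle gradient in a photo.
row = [0, 1, 2, 3, 4, 5, 6, 7]
coeffs = dct_ii(row)

total = sum(c * c for c in coeffs)     # equals sum(v * v for v in row): the transform is orthonormal
low = coeffs[0] ** 2 + coeffs[1] ** 2  # energy in the two lowest frequencies
print([round(c, 2) for c in coeffs])
print(f"{low / total:.1%} of the energy is in the first two coefficients")
```

Because the remaining coefficients are tiny, an encoder can round them to zero and store them very cheaply.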

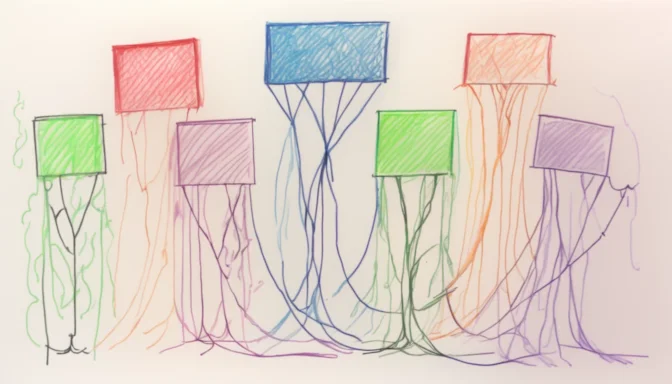

How Does LZ77 Work?

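LZ77 is a lossless compression method published by Abraham Lempel and Jacob Ziv in 1977. It replaces a repeated sequence with a reference to an earlier occurrence inside a sliding window of recently processed data. Each reference is encoded as a (distance, length) pair, so the file shrinks whenever the data contains repetition.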
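Below is a minimal greedy LZ77 sketch in Python. It follows the classic textbook form, which emits (offset, length, next byte) triples. The window size, maximum match length, and token layout are assumptions chosen for readability. Real formats such as DEFLATE encode matches differently and use far faster match finding.

```python
from typing import List, Tuple

Token = Tuple[int, int, int]  # (offset back into the window, match length, next byte)


def lz77_compress(data: bytes, window: int = 4096, max_len: int = 18) -> List[Token]:
    """Greedy LZ77: at each position, emit the longest match found in the sliding window."""
    tokens: List[Token] = []
    i = 0
    while i < len(data):
        best_off, best_len = 0, 0
        for j in range(max(0, i - window), i):
            length = 0
            # A match may run past position i (overlap), which lets LZ77 encode runs.
            # Stop one byte early so there is always a literal "next byte" to emit.
            while (length < max_len and i + length < len(data) - 1
                   and data[j + length] == data[i + length]):
                length += 1
            if length > best_len:
                best_off, best_len = i - j, length
        tokens.append((best_off, best_len, data[i + best_len]))
        i += best_len + 1
    return tokens


def lz77_decompress(tokens: List[Token]) -> bytes:
    out = bytearray()
    for off, length, nxt in tokens:
        for _ in range(length):
            out.append(out[-off])  # copy byte by byte so overlapping matches work
        out.append(nxt)
    return bytes(out)


text = b"abracadabra abracadabra abracadabra"
tokens = lz77_compress(text)
print(len(text), "bytes ->", len(tokens), "tokens")
assert lz77_decompress(tokens) == text
```

The nested loop makes this quadratic in the window size. It demonstrates the idea, not a practical encoder.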

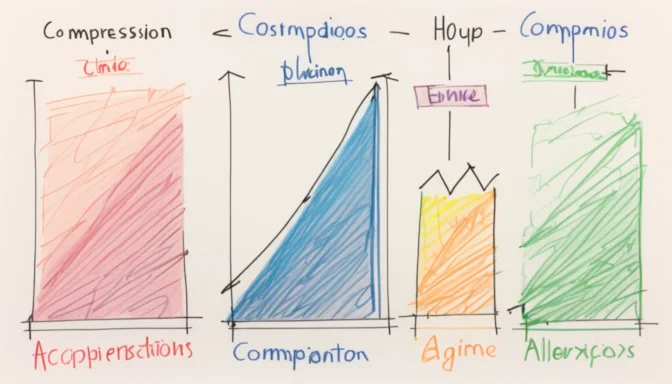

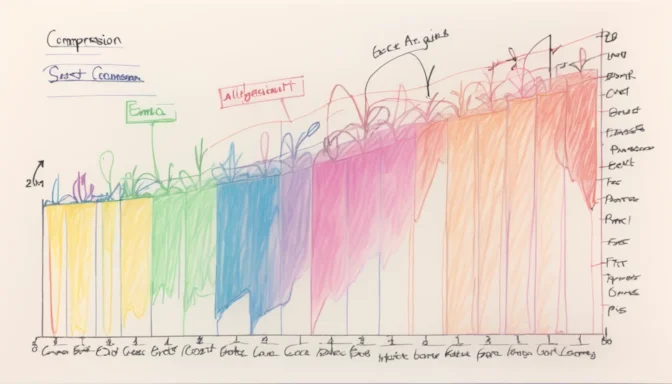

Comparing LZ4 and Zstd

Zstandard (zstd) generally achieves higher compression ratios than LZ4 but compresses more slowly. LZ4 prioritizes speed at the expense of a somewhat lower compression ratio.
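Trade-offs like this are best checked on your own data. The harness below measures ratio and compression throughput for any pair of compress/decompress functions. LZ4 and Zstandard are not in Python's standard library, so the sketch uses stand-ins that show the same speed-versus-ratio tension: `zlib` at level 1 (fast) and `lzma` (strong). If the third-party `lz4` and `zstandard` packages are installed, functions such as `lz4.frame.compress`/`lz4.frame.decompress` or `zstandard.ZstdCompressor().compress`/`zstandard.ZstdDecompressor().decompress` could be added to the `codecs` dictionary in the same way. The sample data is synthetic.

```python
import lzma
import time
import zlib
from typing import Callable, Dict


def benchmark(compress: Callable[[bytes], bytes],
              decompress: Callable[[bytes], bytes],
              data: bytes) -> Dict[str, float]:
    """Return the compression ratio and compression throughput (MB/s) of one codec."""
    start = time.perf_counter()
    packed = compress(data)
    elapsed = time.perf_counter() - start
    if decompress(packed) != data:  # every codec here must be lossless
        raise ValueError("round trip failed")
    return {
        "ratio": len(data) / len(packed),
        "mb_per_s": len(data) / 1e6 / max(elapsed, 1e-9),
    }


# Standard-library stand-ins: light, fast settings versus a slow, strong codec.
codecs = {
    "zlib level 1": (lambda d: zlib.compress(d, 1), zlib.decompress),
    "zlib level 9": (lambda d: zlib.compress(d, 9), zlib.decompress),
    "lzma": (lzma.compress, lzma.decompress),
}

sample = b"".join(b"record %d: status=ok\n" % i for i in range(20000))
for name, (comp, decomp) in codecs.items():
    stats = benchmark(comp, decomp, sample)
    print(f"{name}: ratio {stats['ratio']:.1f}, {stats['mb_per_s']:.0f} MB/s")
```

Single timings are noisy. For real decisions, repeat each measurement and also time decompression.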

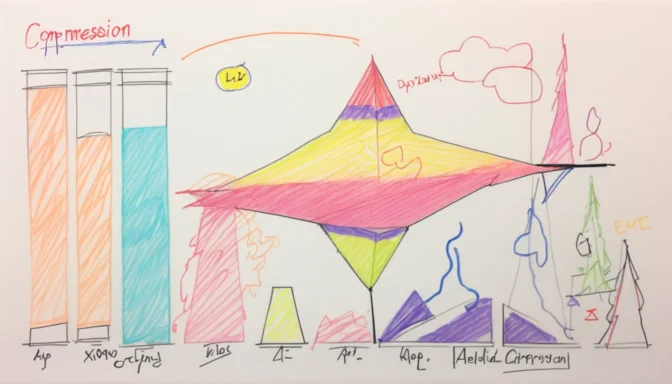

Oldest Compression Algorithms

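The Lempel-Ziv-Welch (LZW) algorithm is one of the foundational algorithms in data compression. Terry Welch published it in 1984 as an improvement on Abraham Lempel and Jacob Ziv's LZ78 algorithm (1978). LZW is not among the very oldest, however: LZ77 dates from 1977, and Huffman coding from 1952.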

Fastest Data Compression Algorithms

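LZ4 is renowned for its speed. It compresses at roughly 400 MB/s or more per core, with the exact figure depending on the hardware and the data. Decompression is considerably faster still, and throughput scales effectively on multi-core CPUs.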
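Multi-core scaling usually comes from splitting the input into independent blocks and compressing them in parallel. The Python sketch below illustrates that general pattern. It uses `zlib` at level 1 as a stand-in for LZ4 and a thread pool. In CPython, `zlib` releases the GIL while compressing, so threads can occupy several cores. The block size, worker count, and function names are assumptions made for illustration.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import List

BLOCK_SIZE = 1 << 20  # 1 MiB per block (an arbitrary choice for this sketch)


def compress_blocks(data: bytes, workers: int = 4) -> List[bytes]:
    """Split data into fixed-size blocks and compress each one independently in parallel."""
    blocks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order, so the blocks stay in sequence.
        return list(pool.map(lambda b: zlib.compress(b, 1), blocks))


def decompress_blocks(parts: List[bytes]) -> bytes:
    return b"".join(zlib.decompress(p) for p in parts)


data = bytes(range(256)) * 20000  # about 5 MB of sample input
parts = compress_blocks(data)
assert decompress_blocks(parts) == data
```

Independent blocks cannot reference each other's contents. This design trades a small loss in compression ratio for parallelism.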

Exploring Lossless Compression Algorithms

Lossless compression algorithms such as LZ77 and LZW allow the original data to be reconstructed perfectly. Rather than discarding anything, they re-encode the data more compactly by exploiting redundancy, for example by replacing repeated byte sequences with short references. The file therefore shrinks without losing any information.
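Here is a minimal LZW round trip in Python that shows the perfect reconstruction in practice. The dictionary starts with all 256 single bytes and grows as new strings are seen. The decoder rebuilds the same dictionary without it ever being transmitted. This sketch assumes an unbounded dictionary and returns codes as plain integers. Real implementations, such as the one in GIF, cap the table size and pack codes into variable-width bit fields.

```python
from typing import Dict, List


def lzw_compress(data: bytes) -> List[int]:
    """Emit one dictionary code per longest known prefix; learn a new entry each time."""
    table: Dict[bytes, int] = {bytes([i]): i for i in range(256)}
    w = b""
    codes: List[int] = []
    for byte in data:
        wc = w + bytes([byte])
        if wc in table:
            w = wc                  # keep extending the current match
        else:
            codes.append(table[w])
            table[wc] = len(table)  # learn the new string
            w = bytes([byte])
    if w:
        codes.append(table[w])
    return codes


def lzw_decompress(codes: List[int]) -> bytes:
    if not codes:
        return b""
    table: Dict[int, bytes] = {i: bytes([i]) for i in range(256)}
    w = table[codes[0]]
    out = bytearray(w)
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):
            entry = w + w[:1]       # code refers to the entry being defined in this step
        else:
            raise ValueError(f"invalid LZW code {code}")
        out += entry
        table[len(table)] = w + entry[:1]
        w = entry
    return bytes(out)


message = b"TOBEORNOTTOBEORTOBEORNOT"
codes = lzw_compress(message)
print(len(message), "bytes ->", len(codes), "codes")
assert lzw_decompress(codes) == message
```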

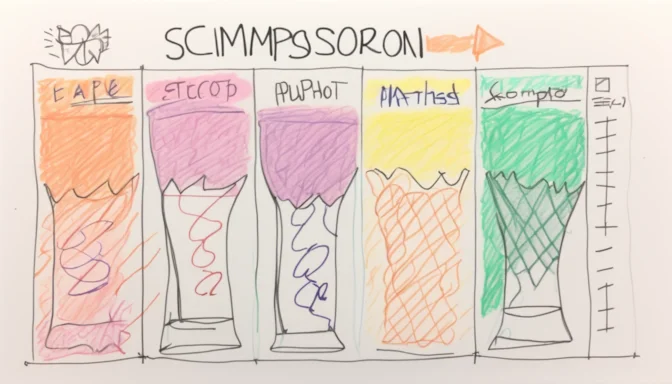

Simplest Compression Algorithms

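Run-Length Encoding (RLE) is the simplest form of compression: it replaces each run of identical values with a single value and a count. It is easy to implement, but its effectiveness depends heavily on the data. It works well on long runs, such as large single-colour areas in simple bitmap graphics, and can actually enlarge data that has few repeated values. More sophisticated algorithms generally achieve better compression but are more complex to implement.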
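A basic RLE encoder and decoder fit in a few lines of Python. This sketch stores runs as (count, byte) pairs with unbounded counts. A real byte-oriented format would typically cap each count so that it fits in a fixed-size field.

```python
from typing import List, Tuple


def rle_encode(data: bytes) -> List[Tuple[int, int]]:
    """Collapse each run of identical bytes into a (count, byte) pair."""
    runs: List[Tuple[int, int]] = []
    for b in data:
        if runs and runs[-1][1] == b:
            runs[-1] = (runs[-1][0] + 1, b)  # extend the current run
        else:
            runs.append((1, b))              # start a new run
    return runs


def rle_decode(runs: List[Tuple[int, int]]) -> bytes:
    return b"".join(bytes([b]) * count for count, b in runs)


print(rle_encode(b"WWWWWWBBBWWWW"))  # [(6, 87), (3, 66), (4, 87)]
print(len(rle_encode(b"ABCDEFG")))   # 7: no runs, so each byte costs a count plus a value
```

The second call shows the weakness described above. Without runs, every byte becomes a pair, roughly doubling the size.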
